Case Study B: Revisiting the Peanuts and Apples Problem

In a Substack article, I described another precursor swarm. This problem is often thought of as a Turing test for LLMs, but it can be solved with multi-agent technology.

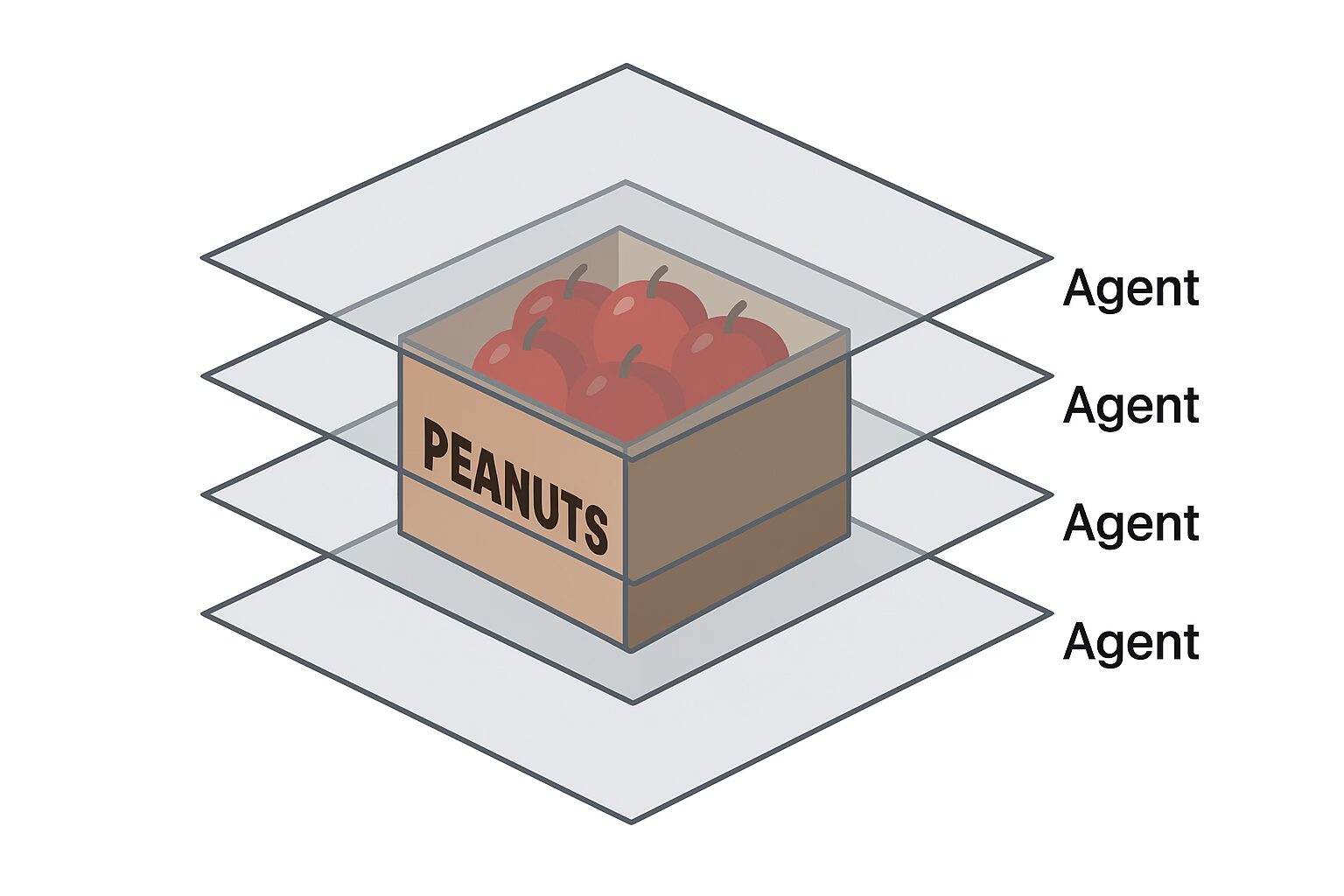

Layers and Peanuts

Another common “unsolvable” problem may actually be solvable with advanced LLMs that employ agents. I explored a solution in depth in a previous article; to summarize:

Imagine a box labeled “Peanuts” that contains apples. Asking an LLM “What’s in the box?” and “What’s wrong with this picture?” often yields shallow or wrong answers.

A human would:

- See that the box contains apples
- Note that the label says “Peanuts”
- Conclude that the contents and the label do not match: either the label is wrong, or the contents are.

Parallel Processing with Agents

Think of it like a CNN: the input is processed through a series of layered agents.

- One detects the label
- One examines the contents
- One compares the two
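
The three agents above can be sketched as a small pipeline. This is a minimal sketch under stated assumptions: each agent is a plain Python function standing in for an LLM or vision call, and the hypothetical `Scene` class holds input that has already been perceived. Because the label and contents agents do not depend on each other, they run in parallel on a thread pool; the comparison agent waits for both.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Scene:
    """Hypothetical, already-perceived input: the label text and the items seen in the box."""
    label_text: str
    visible_items: list[str]


def label_agent(scene: Scene) -> str:
    # Detects the label. A real system might call a vision model or an LLM here.
    return scene.label_text.strip().lower()


def contents_agent(scene: Scene) -> str:
    # Examines the contents and reports the most common item category.
    counts = Counter(item.strip().lower() for item in scene.visible_items)
    return counts.most_common(1)[0][0]


def compare_agent(label: str, contents: str) -> dict:
    # Compares the two upstream findings and lists both possible explanations for a mismatch.
    mismatch = label != contents
    return {
        "label": label,
        "contents": contents,
        "mismatch": mismatch,
        "explanations": ["the label is wrong", "the contents are wrong"] if mismatch else [],
    }


def run_pipeline(scene: Scene) -> dict:
    # The label and contents agents are independent, so they run in parallel.
    with ThreadPoolExecutor(max_workers=2) as pool:
        label_future = pool.submit(label_agent, scene)
        contents_future = pool.submit(contents_agent, scene)
        label, contents = label_future.result(), contents_future.result()
    # The comparison agent needs both results, so it runs once they are available.
    return compare_agent(label, contents)


report = run_pipeline(Scene(label_text="Peanuts", visible_items=["apples"] * 12))
print(report["mismatch"], report["explanations"])
# True ['the label is wrong', 'the contents are wrong']
```

Keeping the comparison as a separate agent means the label and contents detectors stay simple and reusable; only the last step knows what a “mismatch” is.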

Reasoning propagates forward, while feedback flows backward, much like error correction in a neural network. When agents examine one layer at a time and pass their results forward or backward, the final result is an aggregate of information: not just about the box, but about the environment it sits in.
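
One way to picture this forward and backward flow is a loop in which the comparison agent sends a low-confidence signal back to the contents agent, which then re-examines more of the box. This is a hypothetical sketch, not gradient-based backpropagation: the “error” is simply a confidence below a threshold, and the names `contents_agent`, `compare_agent`, `run_with_feedback`, and the 0.9 threshold are illustrative assumptions.

```python
from collections import Counter


def contents_agent(items: list[str], sample_size: int) -> tuple[str, float]:
    # Hypothetical agent that only inspects the first `sample_size` items (assumes items is non-empty).
    sample = items[:sample_size]
    category, count = Counter(sample).most_common(1)[0]
    return category, count / len(sample)  # confidence = share of the sample that agrees


def compare_agent(label: str, contents: str, confidence: float, threshold: float):
    # Returns None when the upstream finding is too uncertain to compare;
    # that None is the feedback signal sent back down the stack.
    if confidence < threshold:
        return None
    return label != contents


def run_with_feedback(label: str, items: list[str], threshold: float = 0.9, max_rounds: int = 5) -> dict:
    sample_size = 2
    for round_number in range(1, max_rounds + 1):
        contents, confidence = contents_agent(items, sample_size)  # forward pass
        verdict = compare_agent(label, contents, confidence, threshold)
        if verdict is not None:
            return {"mismatch": verdict, "contents": contents,
                    "confidence": confidence, "rounds": round_number}
        sample_size *= 2  # backward feedback: ask the lower layer to look at more items
    # No confident verdict within the round budget.
    return {"mismatch": None, "contents": contents,
            "confidence": confidence, "rounds": max_rounds}


items = ["apples", "peanuts"] + ["apples"] * 14
print(run_with_feedback("peanuts", items))
# {'mismatch': True, 'contents': 'apples', 'confidence': 0.9375, 'rounds': 4}
```

Here the contents agent needs four rounds (samples of 2, 4, 8, and 16 items) before its confidence clears the threshold, at which point the comparison agent flags the mismatch.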

The Aggregate Is What Matters

Whether the scan goes top-down, bottom-up, or diagonally through layers, the aggregated insight is what counts.

Core Points:

- Outcome-Centric Design: The system must identify the label, contents, and mismatch
- Layer Scanning Order Is Secondary: Any valid scan path yields the same conclusion
- Aggregated Reasoning Flow: Each layer populates data into a shared reasoning context
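
These core points can be illustrated with a shared context that every layer writes into. The sketch below assumes agents are plain functions and the shared reasoning context is a dictionary; `scan`, `detect_label`, `examine_contents`, and `compare` are hypothetical names. The scan repeats until the context stops changing, so every ordering of the agents reaches the same conclusion.

```python
from itertools import permutations


def detect_label(scene: dict, ctx: dict) -> None:
    ctx["label"] = scene["label"].lower()


def examine_contents(scene: dict, ctx: dict) -> None:
    ctx["contents"] = scene["contents"].lower()


def compare(scene: dict, ctx: dict) -> None:
    # Only concludes once both upstream facts are in the shared context.
    if "label" in ctx and "contents" in ctx:
        ctx["mismatch"] = ctx["label"] != ctx["contents"]


def scan(scene: dict, order) -> dict:
    # Repeats the scan until the shared context stops changing (a fixed point),
    # so an agent that runs "too early" simply contributes on a later pass.
    ctx: dict = {}
    while True:
        before = dict(ctx)
        for agent in order:
            agent(scene, ctx)
        if ctx == before:
            return ctx


scene = {"label": "Peanuts", "contents": "Apples"}
agents = [detect_label, examine_contents, compare]
outcomes = {frozenset(scan(scene, order).items()) for order in permutations(agents)}
print(len(outcomes), scan(scene, agents))
# 1 {'label': 'peanuts', 'contents': 'apples', 'mismatch': True}
```

All six orderings produce a single outcome; an ordering that runs `compare` before both facts exist simply takes one extra pass.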